Charlie

A proactive voice assistant concept design

Date:

March 2021 - May 2021

Keywords:

Tangible interaction, human-centered design, concept design

Tools:

Pen and paper, Miro, Blender

A concept for a proactive voice assistant. The project followed a human-centered design process to develop a voice assistant that can offer services without being prompted by the user, while remaining ethical with respect to privacy.

The challenge

Voice assistants like Google Home or Alexa currently need to be prompted by wake words such as “Hey Google” or “Alexa”. This leaves an opportunity to make them more proactive, which may well be how voice assistants work in the future. For example, a voice assistant could overhear you wondering whether to go for a run and then offer to tell you the weather forecast.

While this is an exciting idea, it raises ethical questions that need to be kept in mind: if the voice assistant is proactive, at what point does it go too far? When would you want it to offer help proactively, and when does it just become creepy?

The challenge of this project was therefore to design a proactive voice assistant that remained ethical with regard to privacy and data collection.

The process

Context of use - interviews and thematic analysis

Following the literature review and questionnaires, we conducted interviews. We went through the responses looking for recurring answers and themes, summarized the findings, and then moved on to creating personas.

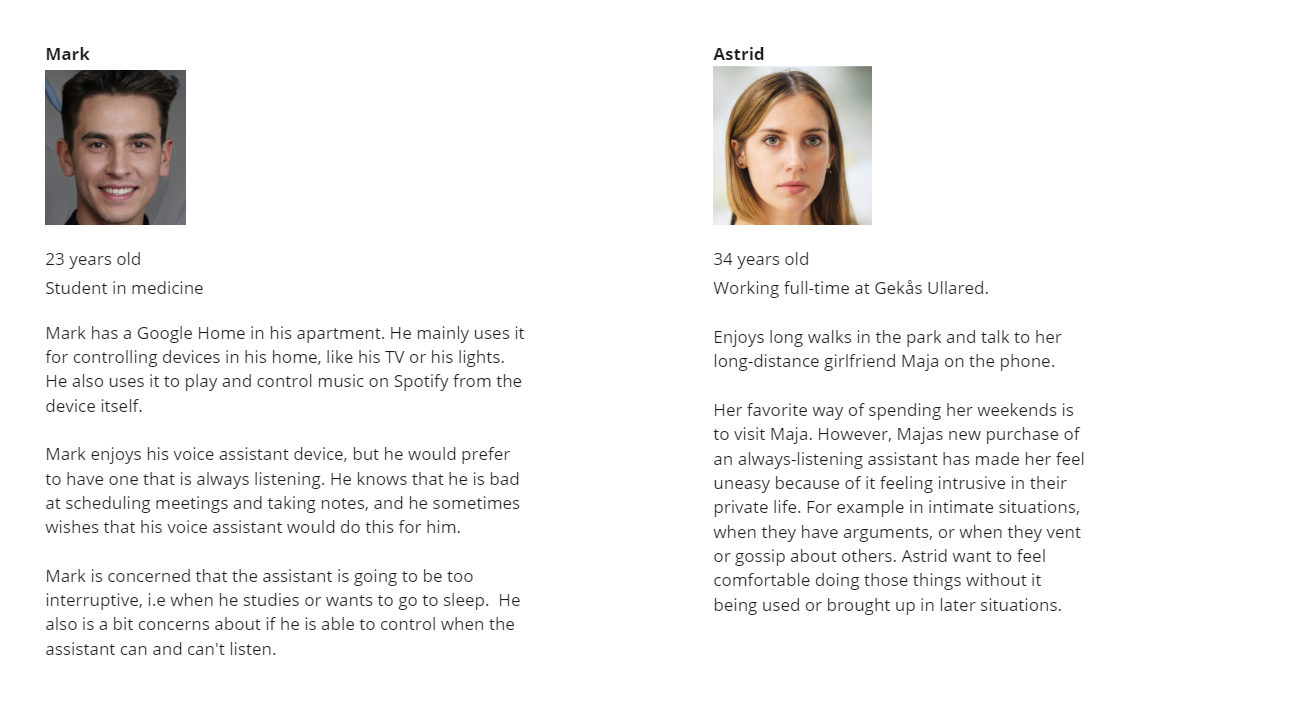

Establishing requirements - personas

With the interviews done, we created two personas based on the responses: Mark (the primary persona) and Astrid.

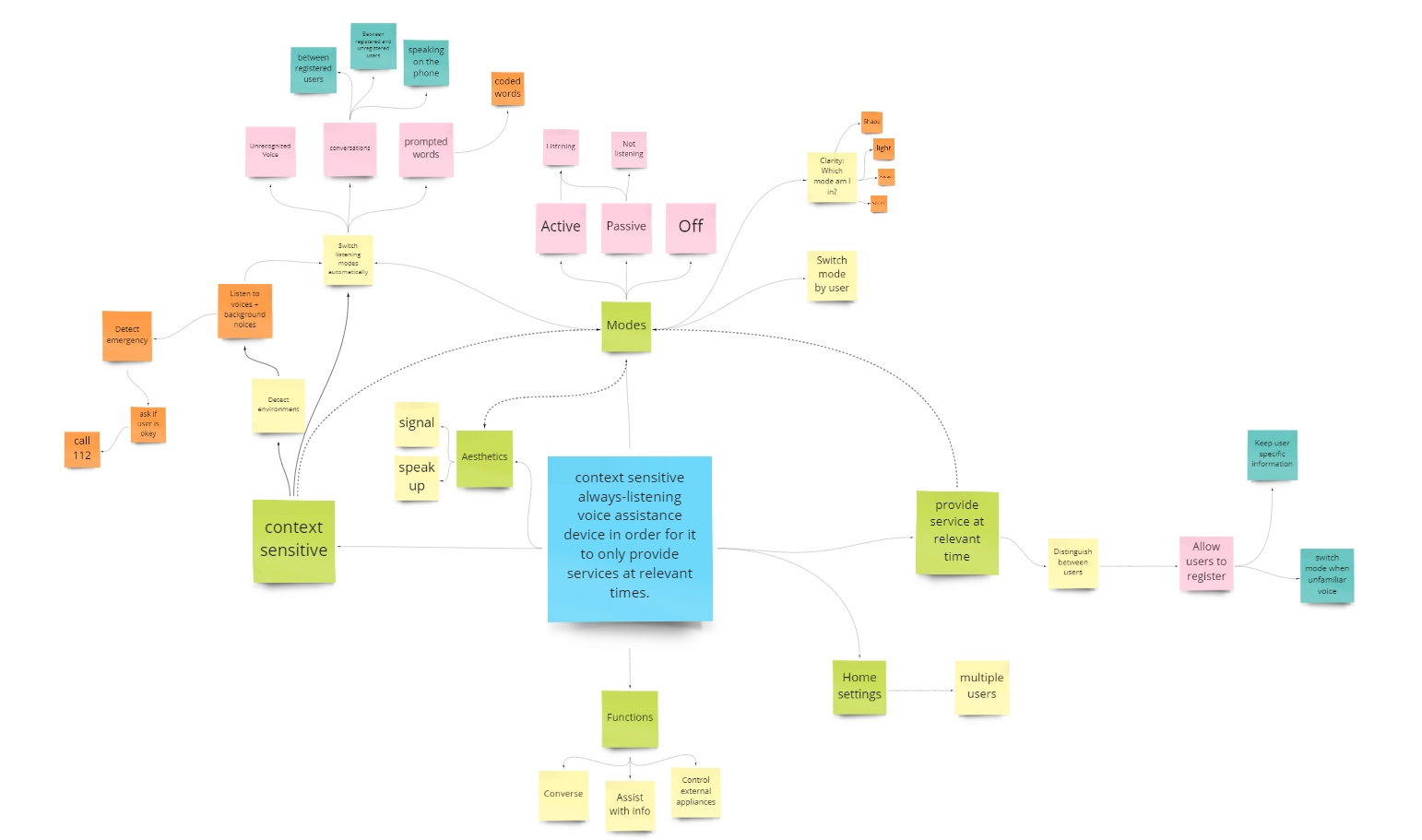

Ideation - mindmapping

Once the personas were in place, we started ideating from the design question that emerged from our formative research: “How can one design a context-sensitive, always-listening voice assistance device in order for it to only provide services at relevant times?” We started with mindmapping to understand which types of ideas might be suitable, intending to move on to brainstorming once we had a more established set of requirements.

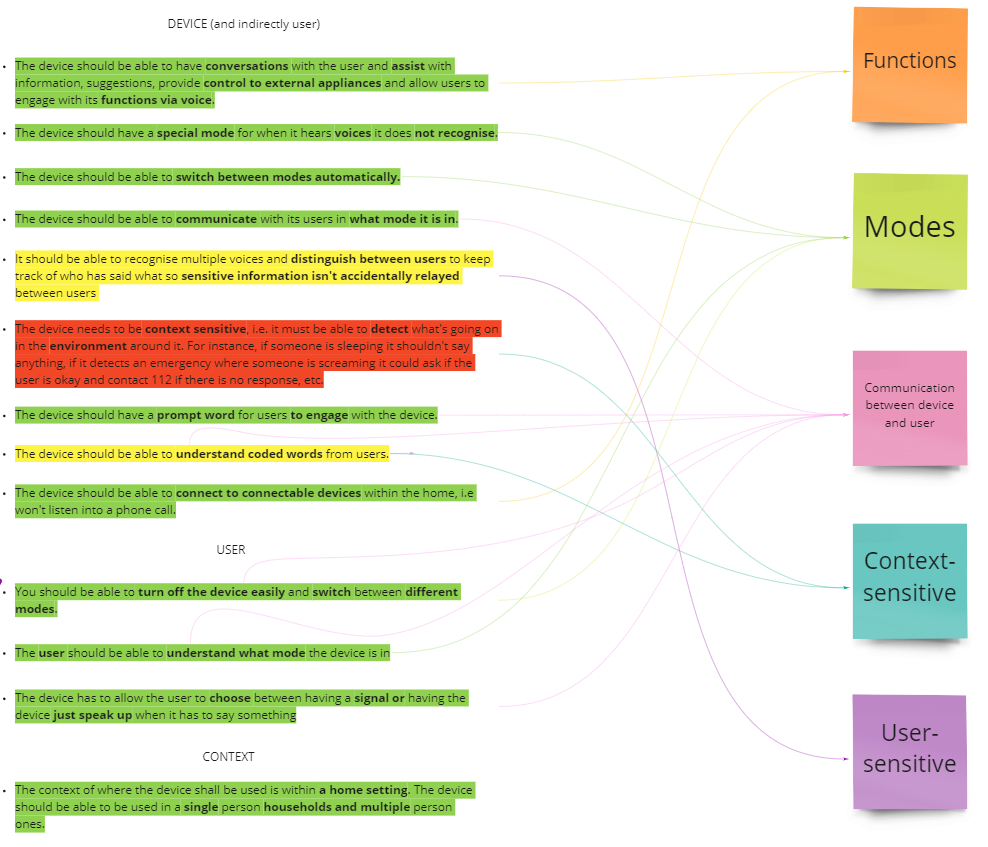

A clearer set of requirements

The mindmapping led us to clearer requirements for the concept. The requirements were grouped into categories, for example that the voice assistant should have modes, which other functions it should have, and that it should be context-sensitive. The requirements can be viewed in the image below.

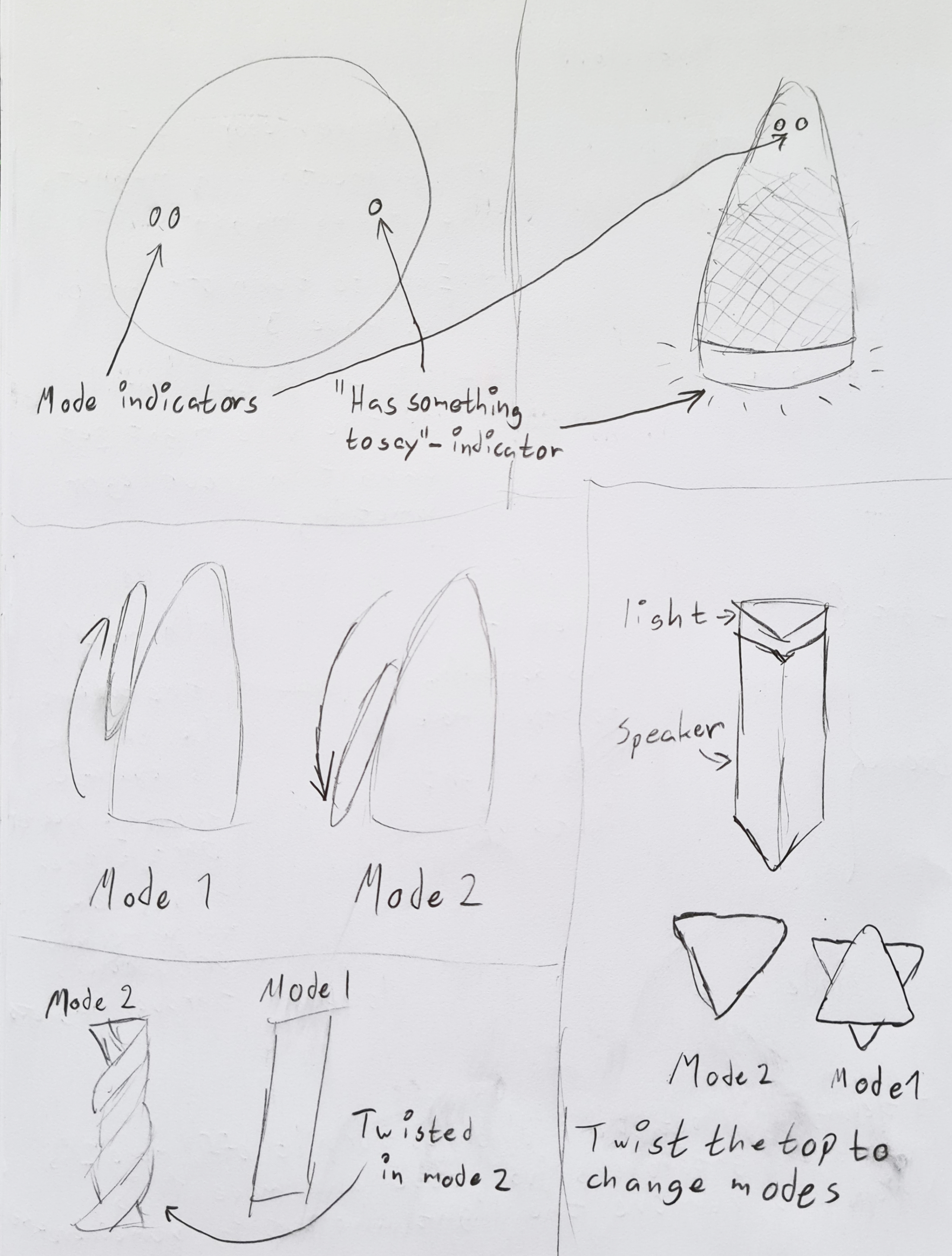

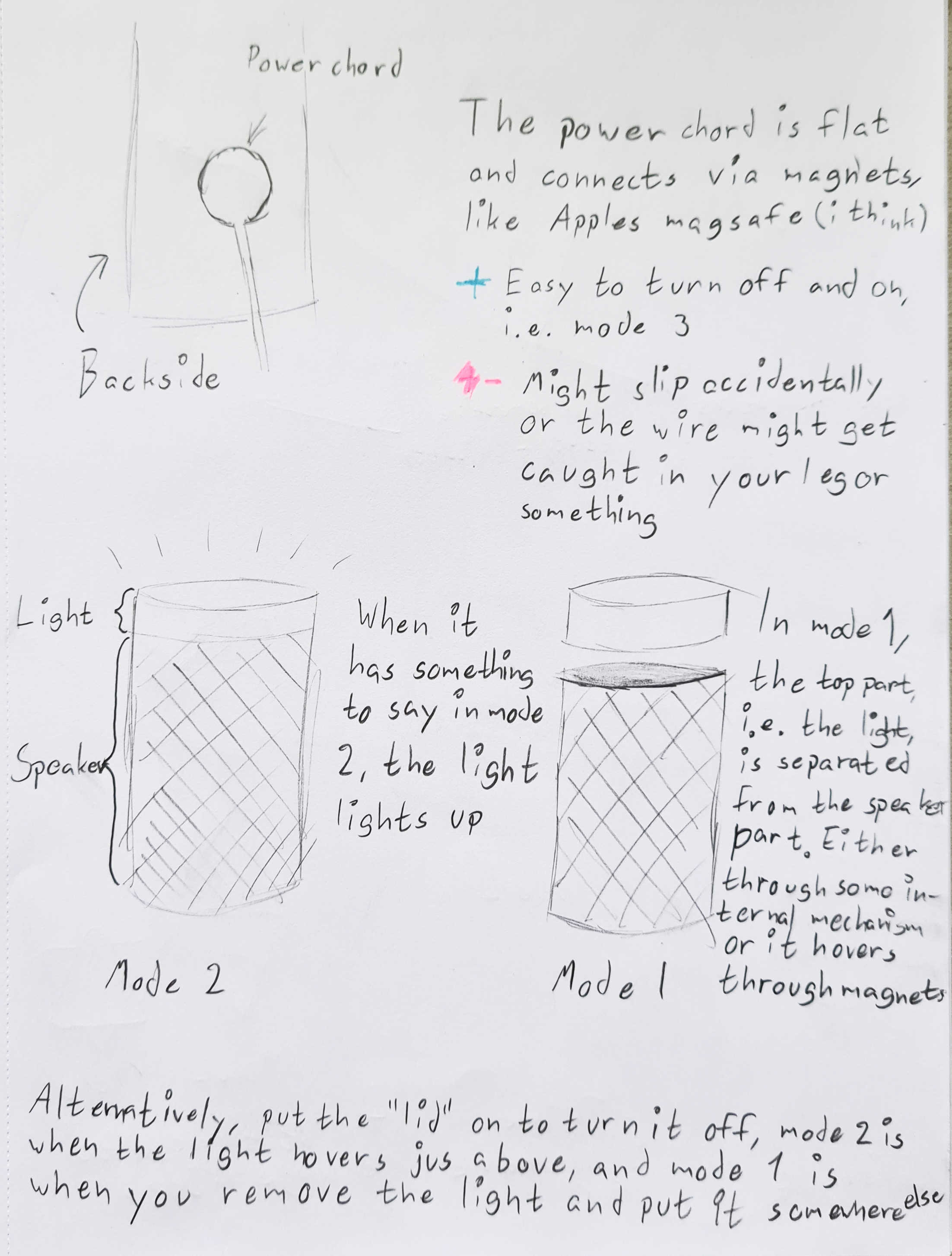

Ideation - brainstorming and sketches

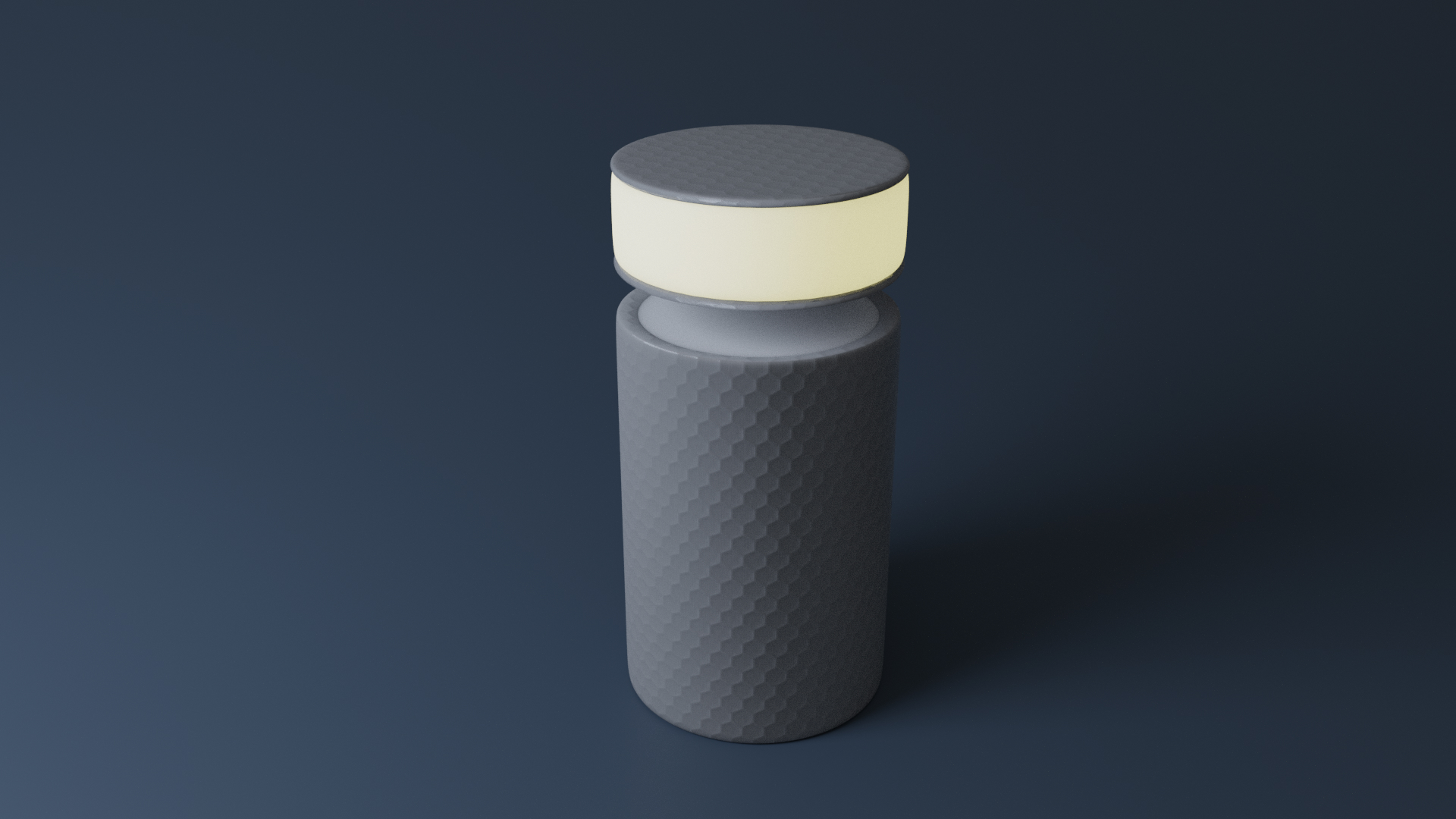

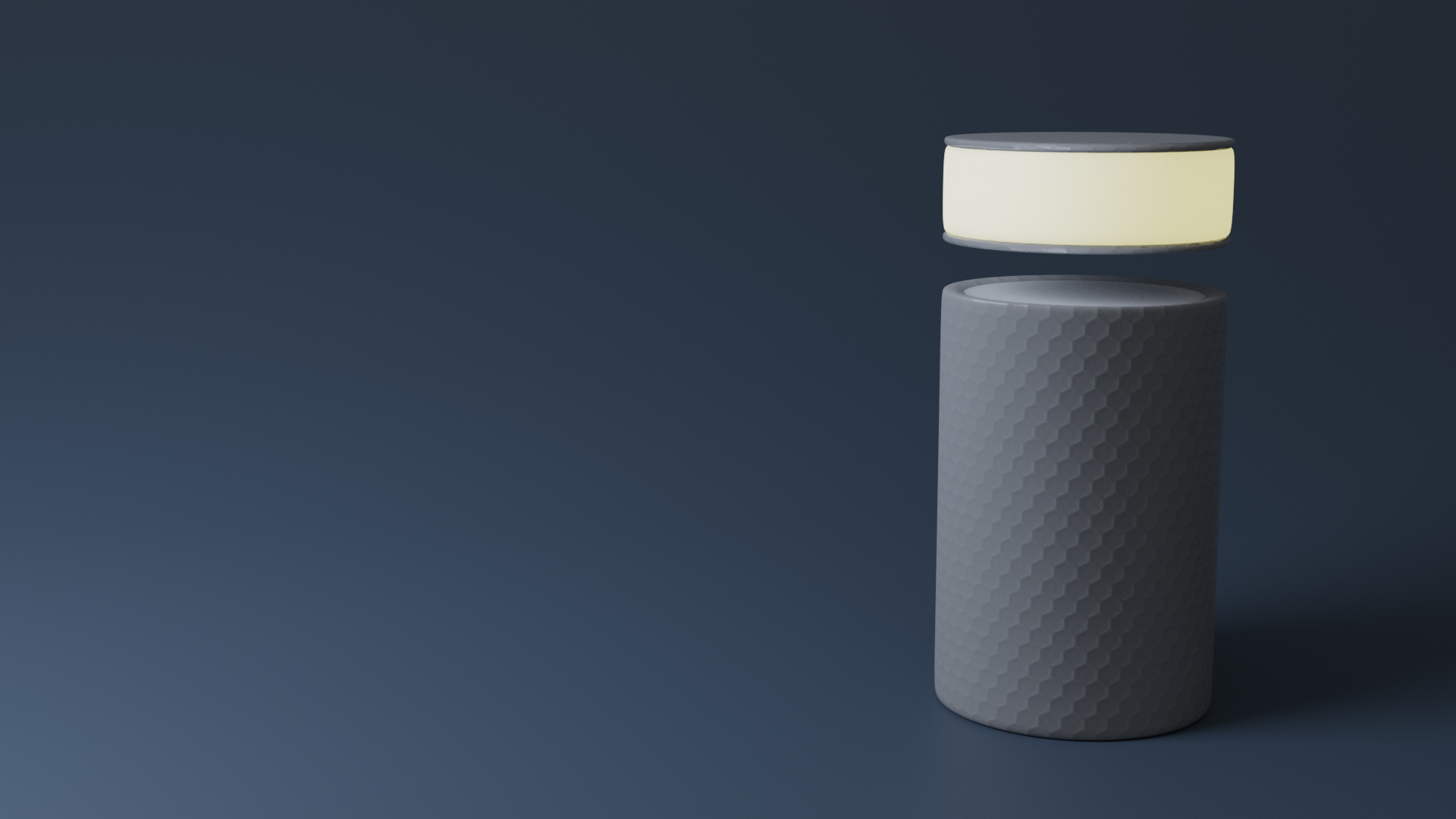

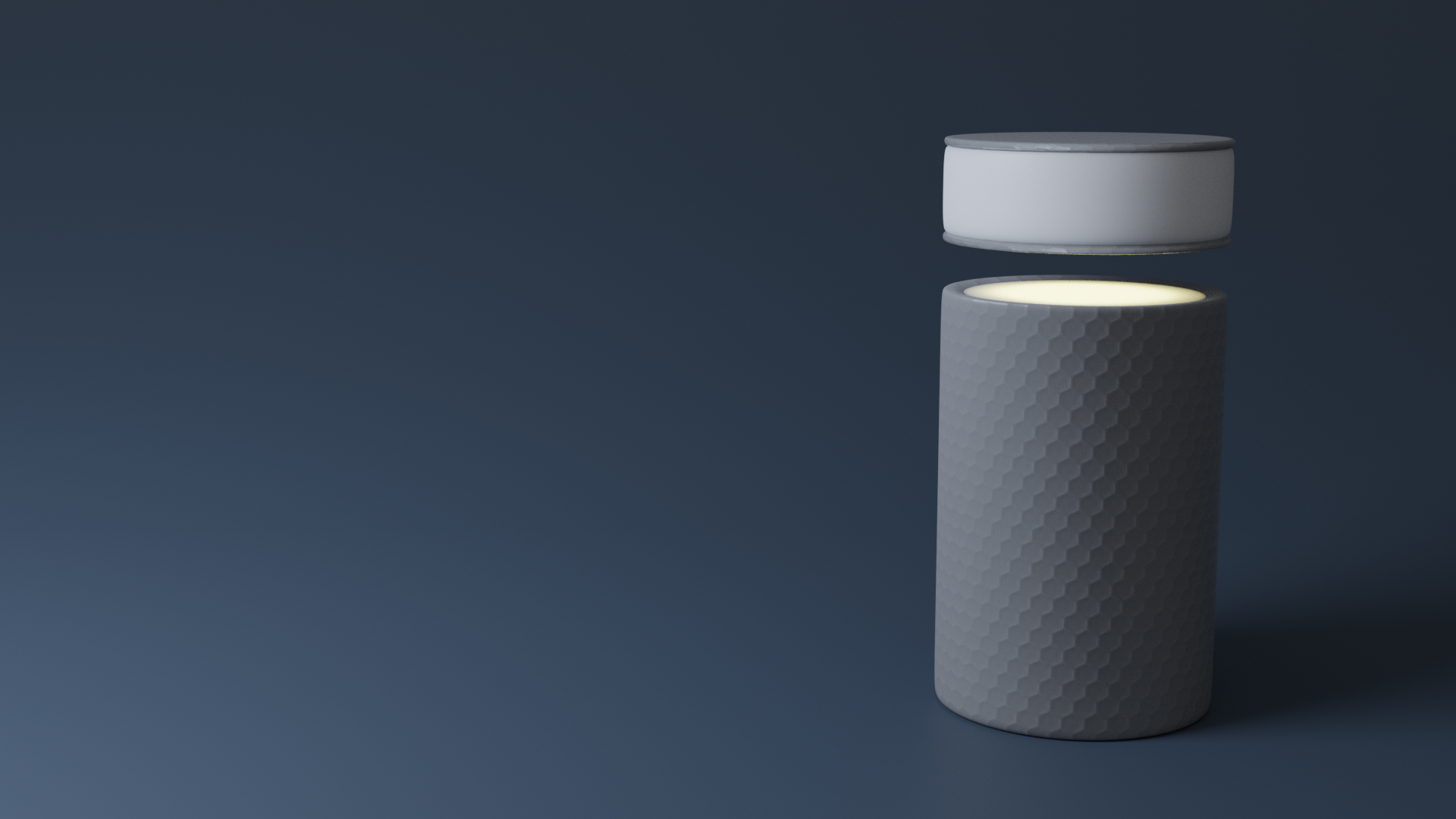

With requirements in hand, it was time for brainstorming. The goal of this session was to ideate on what the device would look like and how it would differentiate between the modes. I tried to think about how the voice assistant’s physical shape could represent active mode and passive mode. This led to ideas such as a design that appears to raise an arm when in active mode. In the end I came up with a more futuristic idea: a device with two parts, a base and a smaller top part that hovers above the base and can also light up (shown below). We later chose to go forward with this design.

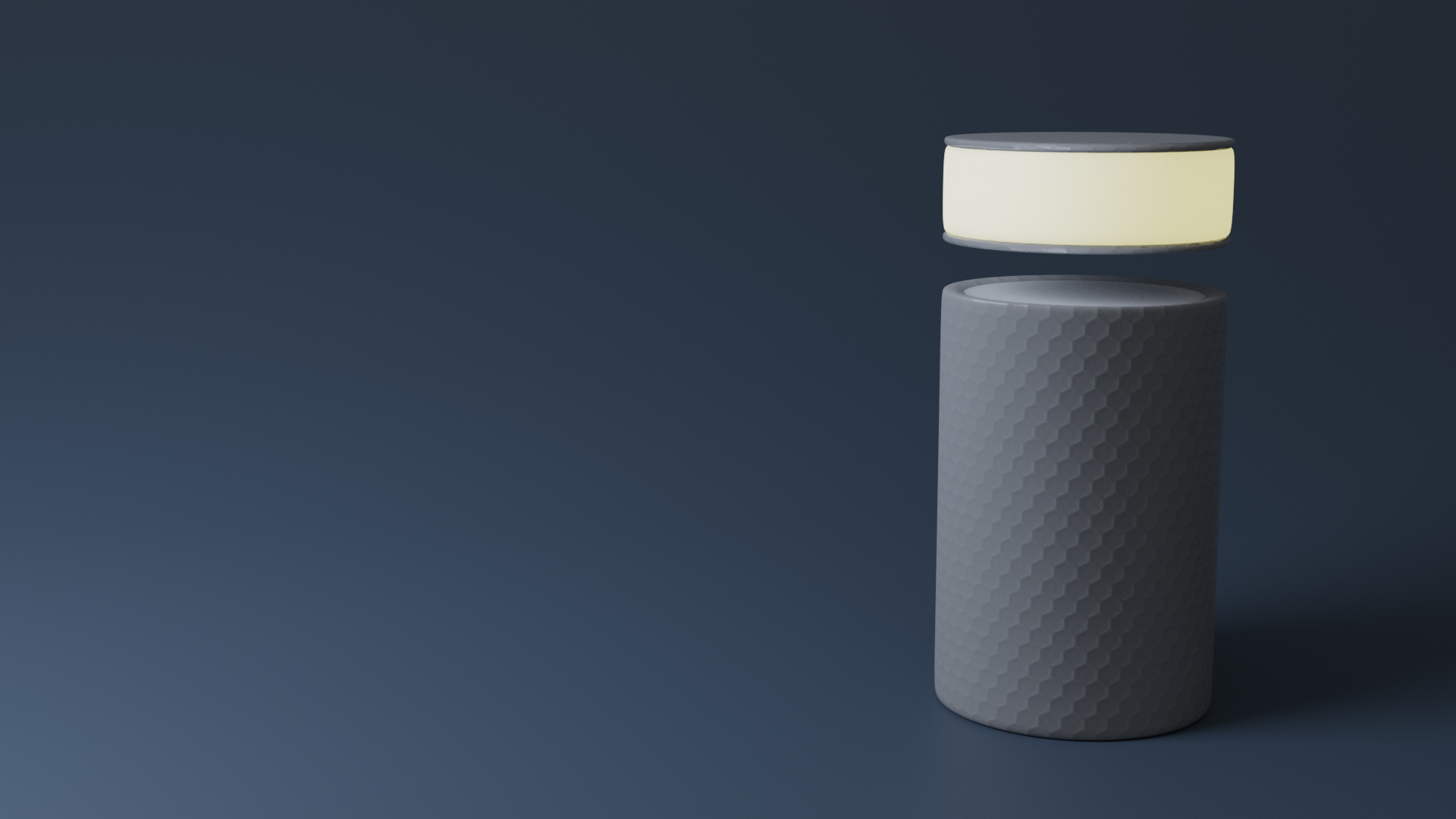

Prototyping - paper and 3D prototype

We began prototyping by building a prototype out of paper and cardboard. The goal of this prototype was to get a sense of the device’s scale and physicality.

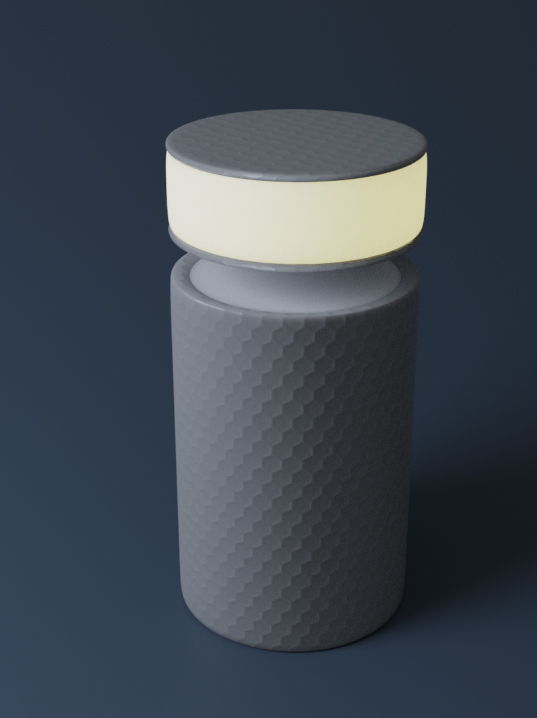

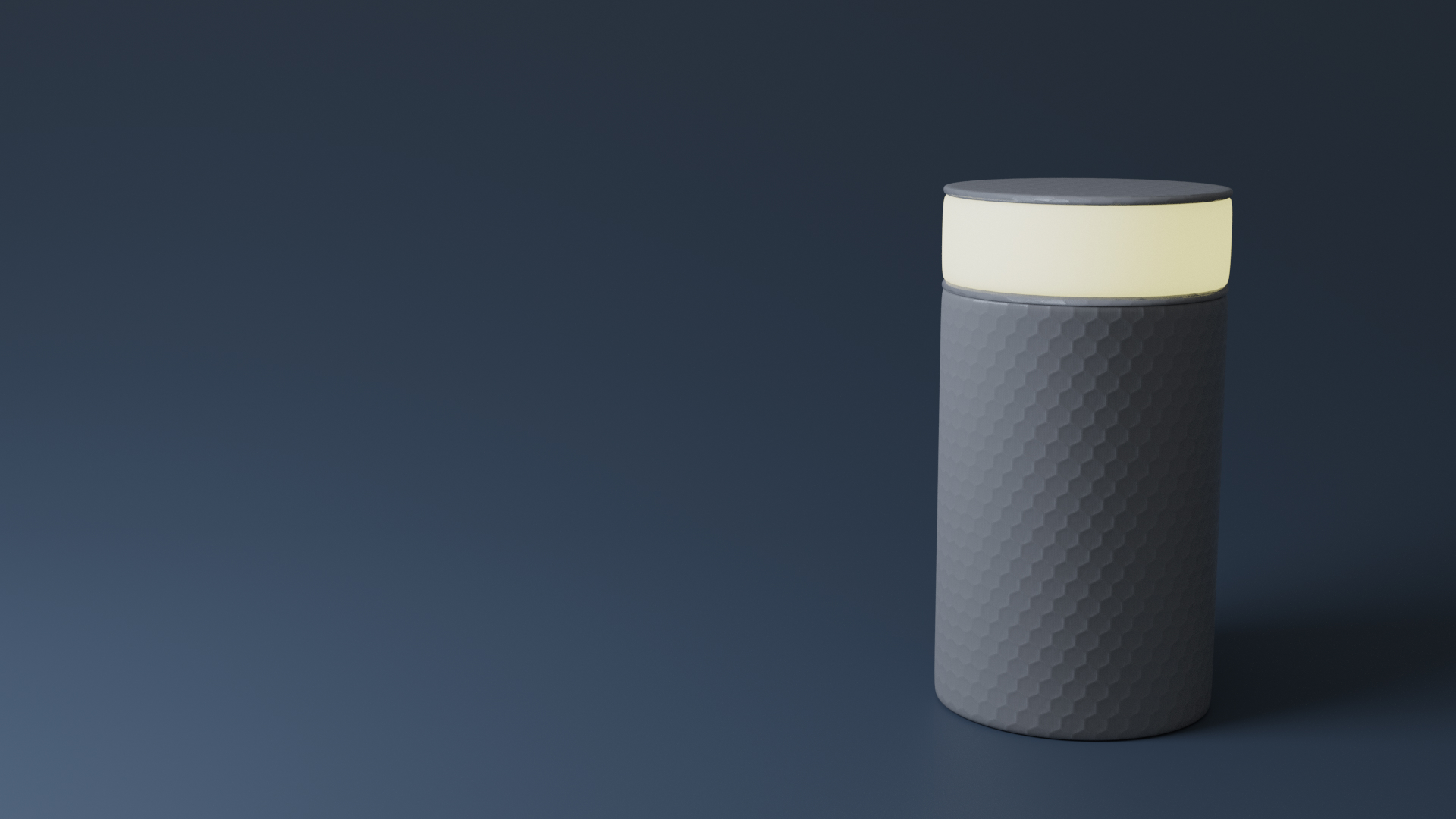

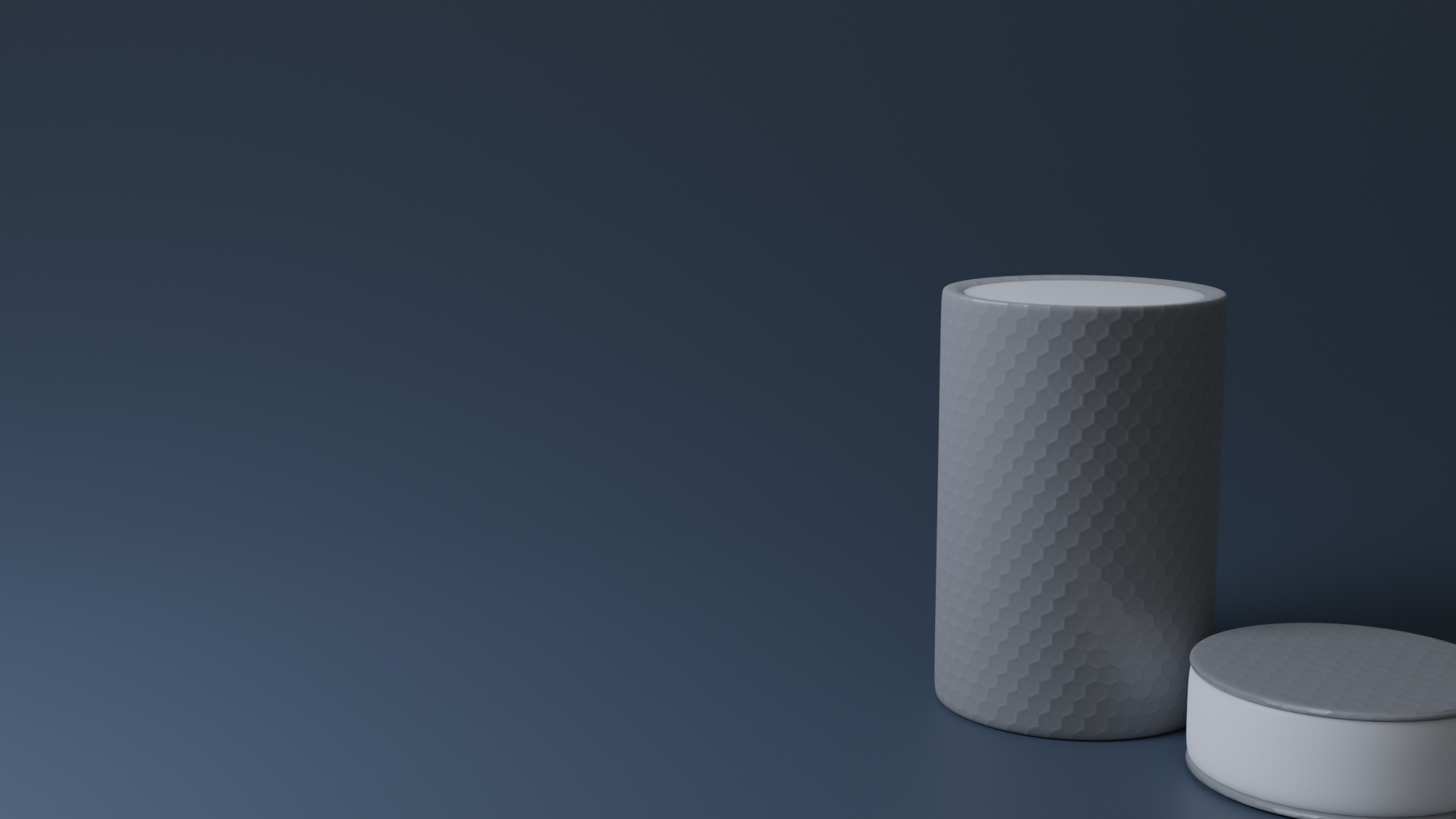

We also made 3D renders of the device in Blender to explore its aesthetics and which materials could potentially be used.

Evaluation - interviews and thematic analysis

With the prototypes complete, we conducted another round of interviews, this time summative rather than formative. We looked at how easily participants understood how the lights and the hovering part related to the modes, what they thought about the device in general, and whether they would be comfortable having one in their home. The findings from these interviews can be seen in the results of this case study.

The Result

The final design and functionality of Charlie the voice assistant are described below, followed by the findings from the final evaluation.

General functionality

2 modes

- Passive mode

- Active mode

Registered voices

- Users can register their voice

- Mode is switched automatically

The most important thing about how the device works is that it has two modes, which we call active mode and passive mode. The other key feature is that you can register your voice as a user, either through a theoretical mobile app or by simply saying “I want to register as a user”. Registration is what allows the device to switch automatically from active mode to passive mode, which I will get to shortly.

Active mode

Always listening, not just for prompt words

- Top part lights up to signal that it is listening when prompted

Can provide services proactively, either by:

- Simply speaking up

- Signaling by light beforehand

Active mode is the “always listening” and “proactive” part of the device: it does not need a prompt word to hear what you say or to provide services. You can still prompt it by saying “Hey Charlie”, at which point the top part lights up to signal that it is listening for a specific command. When the device thinks it can be of service, the user can choose between letting it speak up directly or having it first signal that it has something to say.

Active mode - signal

If the user chooses the signal option, the inside part of the device lights up to show that it has something to say. The user can then ask something like “Did you have something to say, Charlie?” if they want to hear it.

Passive mode

- Only listens for prompt words

- Only speaks when spoken to

- Lights up to signal that it is listening when prompted

- Will not provide services proactively

In passive mode, the device works like a regular voice assistant: it only listens for prompt words and only speaks when spoken to. As in active mode, once you prompt it, the top part lights up to show that it is listening for a command.

Switching between modes

As I mentioned earlier, the device can switch automatically from active to passive mode, which is why users need to be able to register. The idea is that when you have guests over, you probably don’t want the device to interrupt your conversation, and your guests might not be comfortable with a device that is always listening. So if the device hears any voices that aren’t registered, the top part moves down and the device switches to passive mode.

Switched off

Finally, you can turn the device off by simply removing the top part from the base.
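The concept was never implemented, but the mode rules described above can be summarized as a small state machine. The Python sketch below is purely hypothetical. The class and method names, the voice IDs and the `signal_first` preference flag are illustrative assumptions, not part of the project. The rule that the device returns to active mode once only registered voices are heard is also an assumption, because the case study only describes the switch from active to passive.

```python
from enum import Enum, auto


class Mode(Enum):
    ACTIVE = auto()   # always listening; may offer help unprompted
    PASSIVE = auto()  # listens only for the prompt word ("Hey Charlie")
    OFF = auto()      # top part removed from the base


class Charlie:
    """Hypothetical model of Charlie's mode rules (not a real implementation)."""

    def __init__(self, registered_voices, signal_first=False):
        self.registered = set(registered_voices)
        self.signal_first = signal_first  # assumed user preference: light up before speaking
        self.top_attached = True
        self.mode = Mode.ACTIVE
        self.pending = None  # message waiting behind the signal light

    def register(self, voice_id):
        # Stands in for the theoretical app or saying "I want to register as a user"
        self.registered.add(voice_id)

    def remove_top(self):
        # Removing the top part from the base switches the device off
        self.top_attached = False
        self.mode = Mode.OFF

    def hear(self, voices_present):
        """Update the mode based on which voices are currently heard."""
        if not self.top_attached:
            self.mode = Mode.OFF
        elif any(v not in self.registered for v in voices_present):
            # An unregistered voice (e.g. a guest): top part lowers, go passive
            self.mode = Mode.PASSIVE
        else:
            # Assumption: return to active when only registered users are heard
            self.mode = Mode.ACTIVE
        return self.mode

    def offer_help(self, message):
        """What the device does when it thinks it can be of service."""
        if self.mode is not Mode.ACTIVE:
            return None  # passive or off: never proactive
        if self.signal_first:
            self.pending = message
            return "signal light on"  # inside part lights up instead of speaking
        return message  # speak up directly

    def ask_pending(self):
        # User asks: "Did you have something to say, Charlie?"
        message, self.pending = self.pending, None
        return message
```

For example, a device created with `Charlie({"mark"})` stays active while it hears only `{"mark"}`. It drops to passive mode when it hears `{"mark", "guest"}`. Registering the guest lets it return to active mode.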

Findings from final evaluation

Attitude towards device:

- In general, people felt positive towards it.

- One participant felt negative towards it due to privacy concerns.

- One participant felt that the device was unnecessary.

Modes:

- Participants found the modes fairly intuitive. It made sense that it was in active mode when it was “open” and passive mode when it was “closed”.

- Some participants were concerned that you wouldn’t be able to tell which mode the device is in when you can’t see it or are far away from it.

- Switching between modes automatically was appreciated.

- The transition from passive to active mode needs to be clearer. One participant said “I don’t want it to switch to active mode without me knowing”.

Lights:

- The lights were thought to be intuitive and easy to learn.

- Using different colored lights could help distinguish the signal light from the listening light, especially when looking at the device from a distance.

- One concern was that it would be hard to tell whether Charlie had lit up to signal something a few seconds ago or an hour ago.